AI Can Run Your Ecommerce Customer Support

The Problem Every Online Store Faces

Customers expect instant answers — “Where’s my order?”, “Can I change my shipping address?”, “Do you have this in stock?”

Traditional live chat systems struggle to keep up. Even with 24/7 support teams or chatbots, responses often feel robotic, and handling real data (like orders, returns, or account details) is difficult without custom development.

With rapid progress in agentic intelligence over the past few months, today’s AI systems are far more reliable and less error-prone than they were a year ago.

The New Era of AI-Driven Support

Large Language Models (LLMs), like GPT-based assistants, can now do much more than generic chat. When embedded securely into your ecommerce stack, they can:

Access live order data to give real-time updates.

Perform actions such as editing an order or processing a refund.

Upsell and cross-sell intelligently, based on customer history.

Handle many conversations at once, which can reduce support costs substantially.

In short: AI becomes not just a help desk, but a virtual sales and support rep that’s awake 24/7.

What “Embedding” Really Means

Embedding an LLM doesn’t mean replacing your website with ChatGPT. It means integrating an AI brain inside your existing site, powered by your data and business rules.

Here’s the flow in simple terms:

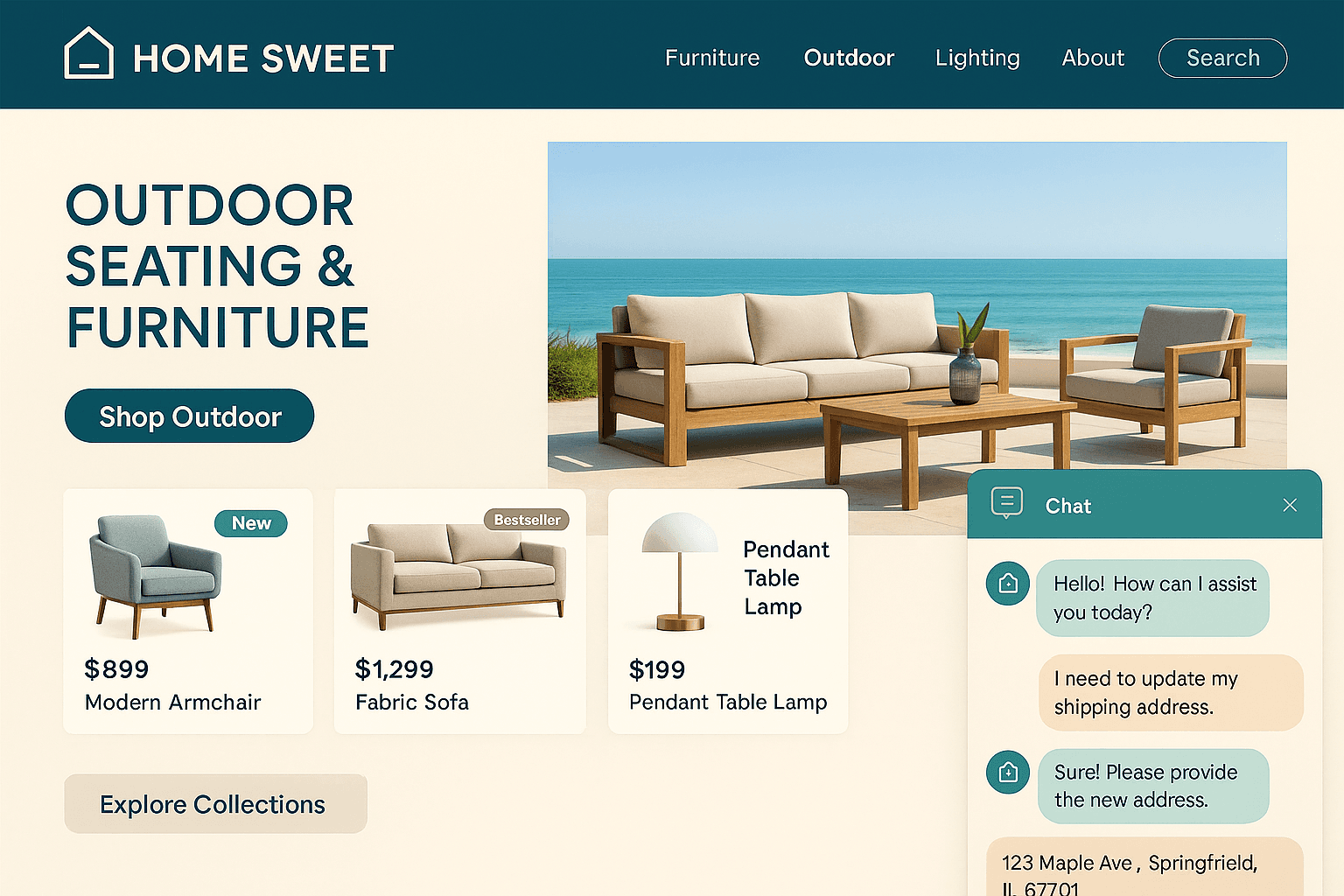

A customer opens your website and types a message like, “Hey, I need to update my shipping address on my last order.”

The AI assistant:

Authenticates the user via your website’s login.

Retrieves their order details from your database or ecommerce platform (e.g., Shopify, WooCommerce, Magento, or your own backend).

Responds conversationally with the order status and options.

Offers to help complete the change or connect to a human if needed.

The system logs the entire exchange securely, and sensitive data stays in your backend: the model receives only the text it needs to answer.
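
The flow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: `SESSIONS`, `ORDERS`, `authenticate`, `latest_order`, and `handle_message` are hypothetical names standing in for your login system, your ecommerce platform's API, and the LLM call.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Order:
    order_id: str
    customer_id: str
    status: str            # e.g. "processing" or "shipped"
    shipping_address: str


# Hypothetical in-memory stand-ins for a session store and an order database.
SESSIONS = {"token-abc": "cust-1"}
ORDERS = [  # assumed to be sorted oldest to newest
    Order("1001", "cust-1", "shipped", "1 Old Street"),
    Order("1002", "cust-1", "processing", "1 Old Street"),
]


def authenticate(session_token: str) -> Optional[str]:
    """Step 1: map the site's login session to a customer id."""
    return SESSIONS.get(session_token)


def latest_order(customer_id: str) -> Optional[Order]:
    """Step 2: fetch the customer's most recent order."""
    mine = [o for o in ORDERS if o.customer_id == customer_id]
    return mine[-1] if mine else None


def handle_message(session_token: str, message: str) -> str:
    """Steps 3 and 4: reply with the order status and the available options.

    In a real system `message` and the order facts would be sent to the LLM,
    which would phrase the reply; a fixed template keeps this sketch runnable
    without an API key.
    """
    customer_id = authenticate(session_token)
    if customer_id is None:
        return "Please log in so I can look up your order."
    order = latest_order(customer_id)
    if order is None:
        return "I couldn't find any orders on your account."
    reply = f"Order {order.order_id} is currently {order.status}."
    if order.status == "processing":
        reply += " I can update the shipping address for you. What should it be?"
    else:
        reply += " It can no longer be edited, but I can connect you to our team."
    return reply
```

Calling `handle_message("token-abc", "I need to update my shipping address")` returns the status of order 1002 and offers the address change, while an unknown token gets the login prompt.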

Why It Works for Business Owners

Instead of building dozens of support flows and FAQ forms, you connect a single intelligent interface that can handle most conversations naturally.

Benefits

Reduced Support Volume: The AI can handle an estimated 60–80% of repetitive requests automatically, depending on your request mix.

Faster Resolution: Instant answers without queue times.

Higher Retention: Personalized tone and quick solutions build trust.

Cross-Sell Potential: “While updating your order, did you want to add another of that item?”

And since it integrates with your existing order database, you stay in full control.

How It’s Built (Conceptually)

Without diving too deep into developer code, here’s what’s happening behind the scenes:

Frontend: The chat interface — on your site or mobile app — sends the user’s message to your server.

Backend: Your system (for example, built with Symfony or Next.js) forwards the request to the AI model’s API, authenticating with an API key that stays on the server.

AI Layer: The LLM processes the message, consults your order system API for real data, and generates a conversational reply.

Streaming Responses: The answer appears in the chat as it’s generated, much like watching a live support agent type.

Optional Human Escalation: If the AI detects complexity (like a dispute or escalation keyword), it alerts your team.
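
As a rough sketch of the backend, streaming, and escalation steps above, the route below checks for escalation keywords first and otherwise forwards the model's reply chunk by chunk. Everything here is an assumption made for illustration: `ESCALATION_KEYWORDS`, `fake_model_stream`, and `chat_route` are hypothetical, and `fake_model_stream` simply replays a canned sentence where a real deployment would call a streaming LLM API.

```python
from typing import Callable, Iterator

# Hypothetical keyword list; a real system might let the model flag
# escalation itself instead of matching on fixed phrases.
ESCALATION_KEYWORDS = ("dispute", "chargeback", "lawyer", "speak to a human")


def needs_human(message: str) -> bool:
    """Return True when the message contains an escalation keyword."""
    text = message.lower()
    return any(keyword in text for keyword in ESCALATION_KEYWORDS)


def fake_model_stream(prompt: str) -> Iterator[str]:
    """Stand-in for a streaming LLM call: yields a canned reply word by word."""
    for word in "Your order shipped yesterday and should arrive Friday.".split():
        yield word + " "


def chat_route(
    message: str,
    model_stream: Callable[[str], Iterator[str]] = fake_model_stream,
    notify_team: Callable[[str], None] = print,
) -> Iterator[str]:
    """Backend route: escalate if needed, otherwise stream the model's reply."""
    if needs_human(message):
        notify_team(f"Escalation requested: {message}")
        yield "I'm connecting you with a member of our team."
        return
    # Each chunk is forwarded as soon as it arrives, so the chat widget
    # can render the answer progressively.
    yield from model_stream(message)
```

Because `chat_route` is a generator, a web framework can send each yielded chunk to the browser as it arrives (for example over server-sent events), and the same function can be tested by joining the chunks into one string.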

Essentially, it’s your website — but with a brain.

Example Use Cases

Order tracking: “Where’s my order?” → The AI fetches the latest tracking info and estimated delivery.

Order modification: “Can I change my address?” → The AI checks that the user is authenticated and that the order is still editable, then updates the order record.

Returns/refunds: “I want to return my shoes.” → The AI checks the order, confirms eligibility, and sends a return label.

Product discovery: “Show me similar items under $50.” → The AI filters your catalog dynamically.

Upselling: “You bought X — people who liked that also bought Y.” → Intelligent recommendations based on historical data.
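
To make the order modification case concrete, here is a minimal sketch of the checks that could run in your backend before the AI is allowed to change an address. The `Order` shape, the `EDITABLE_STATUSES` set, and the reason codes are hypothetical; your platform will have its own rules for when an order is locked.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Assumption for this sketch: orders can only be edited before they ship.
EDITABLE_STATUSES = {"pending", "processing"}


@dataclass
class Order:
    order_id: str
    customer_id: str
    status: str
    shipping_address: str


def change_address(
    order: Order, requester_id: Optional[str], new_address: str
) -> Tuple[bool, str]:
    """Validate the request, then update the order; returns (ok, reason)."""
    if requester_id is None:
        return False, "not_authenticated"
    if order.customer_id != requester_id:
        return False, "not_owner"
    if order.status not in EDITABLE_STATUSES:
        return False, "not_editable"
    if not new_address.strip():
        return False, "empty_address"
    order.shipping_address = new_address.strip()
    return True, "updated"
```

Keeping these checks in ordinary backend code, rather than in the prompt, means the model can only request the change; whether it happens is decided by your business rules.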

Security & Trust Are Non-Negotiable

Any AI system handling orders and personal data must be:

Hosted behind your authentication (so only verified customers can use it).

Compliant with data privacy laws (GDPR, CCPA).

Transparent about limits (AI shouldn’t confirm a refund that requires approval).

The LLM itself only processes the text needed to understand the request — not your entire database.
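
One way to keep the model's view of your data narrow is to pass it only an allow-listed subset of each order and to redact obvious identifiers from free text. The sketch below assumes a hypothetical `ALLOWED_FIELDS` list and two deliberately simple regular expressions; it illustrates the idea and is not a complete PII filter.

```python
import re

# Assumption: these are the only order fields needed to answer status questions.
ALLOWED_FIELDS = ("order_id", "status", "estimated_delivery")

# Illustrative patterns only; real PII detection needs broader coverage.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def minimal_order_context(order: dict) -> dict:
    """Keep only the allow-listed fields before anything reaches the model."""
    return {key: order[key] for key in ALLOWED_FIELDS if key in order}


def redact(text: str) -> str:
    """Mask email addresses and card-like digit runs in customer messages."""
    text = EMAIL_PATTERN.sub("[email]", text)
    return CARD_PATTERN.sub("[card]", text)
```

With this in place, an order record that also carries an email address or payment details is reduced to its ID, status, and delivery estimate before it is added to the prompt.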

What It Takes to Implement

If you already have a modern ecommerce backend (like Shopify Plus, a Symfony API Platform app, or a custom Next.js API), adding this capability is surprisingly doable.

A typical setup includes:

A chat widget on your site (React or Web Components).

A server route to securely call the AI model.

Integration with your orders API and authentication system.

A caching layer to speed up product lookups.

Analytics dashboards to track resolved requests and escalation rates.

An account with an AI service, API key, and credits.
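
Of the pieces listed above, the caching layer is the easiest to show in isolation. The following is a minimal sketch of a time-based cache for product lookups; `TTLCache` and its `loader` callback are hypothetical names, and a production setup would more likely use a shared cache such as Redis or Memcached.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Tiny in-process cache whose entries expire after `ttl_seconds`."""

    def __init__(
        self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic
    ) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get_or_load(self, key: str, loader: Callable[[str], Any]) -> Any:
        """Return a fresh cached value, or call `loader` and store its result."""
        now = self.clock()
        hit = self._entries.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        value = loader(key)
        self._entries[key] = (now, value)
        return value
```

A cache like this suits slow-changing catalog data such as product names and descriptions. Live order status and stock levels are better fetched fresh, or cached with a very short TTL, so the assistant never quotes stale information.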

The investment is usually offset within months through reduced ticket volume and improved conversions. AI costs keep falling over time and are currently very low for options like GPT-5.
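
To estimate the payback period for your own store, the arithmetic is simple enough to put in a small function. The parameter names are hypothetical, the figures in the usage note are made-up placeholders rather than benchmarks, and the sketch counts only ticket savings, leaving out any gain from improved conversions.

```python
from typing import Optional


def payback_months(
    setup_cost: float,
    tickets_per_month: float,
    automated_share: float,   # fraction of tickets the AI resolves, 0.0 to 1.0
    cost_per_ticket: float,   # what a human-handled ticket costs you
    monthly_ai_cost: float,   # API usage plus hosting
) -> Optional[float]:
    """Months until savings cover the setup cost, or None if they never do."""
    monthly_savings = tickets_per_month * automated_share * cost_per_ticket
    net_monthly_gain = monthly_savings - monthly_ai_cost
    if net_monthly_gain <= 0:
        return None
    return setup_cost / net_monthly_gain
```

With placeholder inputs of a 12,000 setup cost, 2,000 tickets a month, half of them automated, 4.00 per ticket, and 1,000 a month in AI costs, the monthly saving is 4,000, the net gain is 3,000, and the function returns a payback of 4 months.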

The Bottom Line

AI chat isn’t just a “nice-to-have” anymore — it’s the new expectation.

Embedding an LLM directly into your ecommerce stack turns your store into an intelligent, self-service experience.

Your customers get faster help.

Your team gets fewer repetitive tasks.

Your business gets smarter with every conversation.

Pro Tip

Start small — launch your AI chat for order updates only, then expand to handle returns, product questions, and upsells.

Each new data integration multiplies the AI’s usefulness and your ROI.